Samir Chopra

Philosophical Counseling

Welcome to my online home for my writing and philosophical counseling practice.

Philosophical Counseling

Philosophical counseling aims to fulfill philosophy’s perennial promise and responsibility: ‘Empty are the words of that philosopher who does not offer therapy for human suffering. Just as there is no use in medical expertise if it does not give therapy for bodily diseases, so too there is no use in philosophy if it does not expel the suffering of the soul.’

– Epicurus (341–271 BCE)

Writings

Books

Anxiety: A Philosophical Guide

Anxiety explores ancient and modern philosophies, which suggest that anxiety is a normal, essential part of being human; to be is to be anxious. Inseparable from the human condition, anxiety is indispensable for grasping it. Realizing this is transformative, allowing us to live more meaningful lives by giving us a richer understanding of ourselves. Philosophy may not cure anxiety but, by leading us to greater self-knowledge and self-acceptance, it may be able to make us less anxious about being anxious.

The Moral Psychology of Anxiety

The Moral Psychology of Anxiety presents new work on the causes, consequences, and value of anxiety. Straddling philosophy, psychology, clinical medicine, history, and other disciplines, the chapters in this volume explore the meaning of anxiety in different philosophical traditions and historical periods, and inquire into anxiety’s nature and significance.

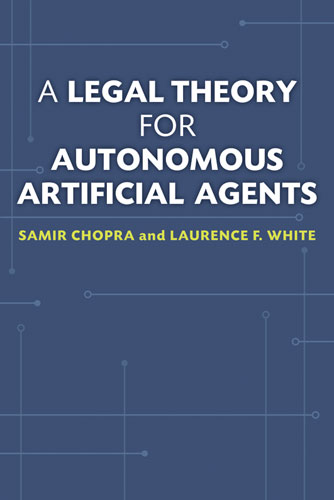

A Legal Theory for Autonomous Artificial Agents

(with Laurence F. White)

If artificial intelligence (AI) technology advances as today’s leading researchers predict, artificial agents may soon function with such limited human input that they appear to act independently. When they achieve that level of autonomy, what legal status should they have?

Decoding Liberation

(with Scott D. Dexter)

Software is more than instructions for computing machines: it enables, and disables, political imperatives and policies. We ask, “What are the freedoms of free software, and how are they manifested?”

Essays

‘There are two ways in which philosophy can help us with anxiety: a specific doctrine may offer us a prescription for how to rid ourselves of anxiety; and philosophical method may help us understand our anxiety better. ‘Anxiety’ is inchoate, a formless dread; why do we feel it, and must we suffer it? Philosophy’s answer is that anxiety is a constitutive aspect of the human condition; we must live with it. We will always be anxious in some measure, but we do not have to be anxious about being anxious.’

– from ‘On Not Being Anxious About Anxiety’

From the Blog

Refusing to Stick to the Subject

- Book Release: “Anxiety: A Philosophical Guide”
  I’m pleased to make note here of the release, on March 19th, of my book Anxiety: A Philosophical Guide, published by Princeton University Press. Here is the book’s description and cover:

- The Worst Kind of Listener
  The worst kind of listener isn’t the one that is patently distracted (by thumb-flashing smartphone interaction or some endless performative scrolling), cannot make eye contact, shows through their follow-up questions that they weren’t paying attention anyway, rolls their eyes, or …

- The Anxieties Of A Beautiful Day
  That mysterious, terrible anxiety felt on a beautiful day–whether that of the spring, summer, fall, or winter–is perhaps better understood when we realize that such anxiety is not one, but many anxieties. To wit, that anxiety is: The anxiety of …